Student engagement has long been recognized as key to academic success. Most research, however, has looked at engagement in general, across the school setting as a whole.

Quantitative psychologist Ying “Alison” Cheng is working to better understand the link between student engagement and learning outcomes in a specific course — and how adaptive testing can help.

With funding from a 2014 Early Career Development Award from the National Science Foundation, Cheng has now developed a computerized adaptive testing system for high school students taking Advanced Placement (AP) Statistics and is exploring the relationship between feedback and engagement.

“Research has shown that providing feedback is crucial to improving skill acquisition, especially when instruction is done in a computer-based fashion,” she said. “As more and more online resources are being offered, this could have a big impact down the road.”

The project, which will be completed in summer 2020, has already shown promising results and has led Cheng to develop a similar assessment program for non-AP statistics students, with the support of $1.4 million in funding from the Institute of Education Sciences.

“Statistics is such a pervasive, important subject — it affects everyone,” she said. “Non-STEM majors will also require statistical literacy, so we think it is very important to take this project beyond the AP population.”

‘Traffic light’ report

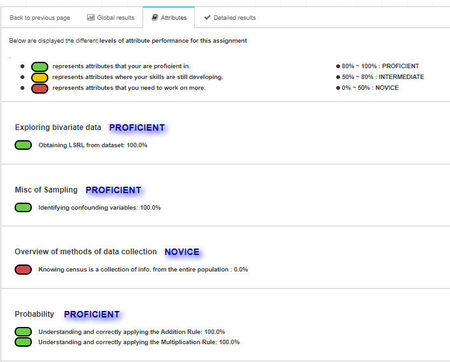

Students and their teachers receive a "traffic light" report for each attribute directly after the test.


Cheng, also a fellow at the Institute for Educational Initiatives, began by examining statistics course material and identifying 157 specific concepts, or attributes, students need to learn. Her team analyzed how existing test questions matched up with those attributes and recruited experts to write more — creating a bank of about 800 questions, each labeled with the attributes it measures.
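For readers curious how an attribute-labeled question bank can be represented, the sketch below stores each question with the set of concepts it measures; in diagnostic testing this kind of labeling is often called a Q-matrix. It is a minimal illustration that assumes hypothetical question IDs and attribute names, not the actual structure of Cheng's system.

```python
from typing import Dict, List, Set

# Hypothetical item bank: each question ID maps to the set of attributes
# (concepts) it measures. IDs and attribute names are invented for illustration.
ITEM_BANK: Dict[str, Set[str]] = {
    "q001": {"mean", "median"},
    "q002": {"standard_deviation"},
    "q003": {"median", "boxplot"},
    "q004": {"sampling_distribution", "standard_deviation"},
}


def items_for_attribute(bank: Dict[str, Set[str]], attribute: str) -> List[str]:
    """Return the sorted IDs of all items labeled with the given attribute."""
    return sorted(item for item, attrs in bank.items() if attribute in attrs)


def q_matrix(bank: Dict[str, Set[str]], attributes: List[str]) -> List[List[int]]:
    """Build a 0/1 Q-matrix: one row per item (sorted by ID), one column per attribute."""
    return [
        [1 if a in bank[item] else 0 for a in attributes]
        for item in sorted(bank)
    ]


if __name__ == "__main__":
    print(items_for_attribute(ITEM_BANK, "median"))  # ['q001', 'q003']
    print(q_matrix(ITEM_BANK, ["mean", "median", "standard_deviation"]))
    # [[1, 1, 0], [0, 0, 1], [0, 1, 0], [0, 0, 1]]
```

A lookup like `items_for_attribute` is one simple way to let someone pull every question that targets a single concept.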

Working with the Center for Social Science Research, they developed the testing interface and began to collect pilot data. In the project’s third year, they launched the testing system in six schools — representing a range of socioeconomic levels in urban, suburban, and rural areas — and used the data to begin designing individual diagnostic reports.

“Adaptive testing allows you to give tailored assessments to each person,” Cheng said. “So, instead of just giving students a score of 80 out of 100 questions, we are able to provide a scoring profile that says, ‘you’re strong in each of these areas, but you’re struggling with these concepts.’”
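One common way to build that kind of per-concept profile is to keep a running mastery probability for each attribute, update it with Bayes' rule after every answer, and choose the next question where the system is least certain. The sketch assumes single-attribute questions, a DINA-style slip/guess model with made-up parameter values, and a simple "closest to 0.5" selection rule; the article does not describe the model or selection algorithm Cheng's system actually uses.

```python
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical DINA-style parameters (not taken from Cheng's system).
SLIP = 0.1   # P(wrong answer | attribute mastered)
GUESS = 0.2  # P(right answer | attribute not mastered)


def update_mastery(p: float, correct: bool,
                   slip: float = SLIP, guess: float = GUESS) -> float:
    """Posterior P(mastered) after one response to a single-attribute item (Bayes' rule)."""
    if correct:
        like_master, like_non = 1 - slip, guess
    else:
        like_master, like_non = slip, 1 - guess
    numerator = p * like_master
    return numerator / (numerator + (1 - p) * like_non)


def next_item(item_attr: Dict[str, str], mastery: Dict[str, float],
              used: Set[str]) -> Optional[str]:
    """Choose the unused item whose attribute estimate is closest to 0.5 (most uncertain).

    Ties go to the alphabetically first item ID.
    """
    candidates = [i for i in sorted(item_attr) if i not in used]
    if not candidates:
        return None
    return min(candidates, key=lambda i: abs(mastery[item_attr[i]] - 0.5))


def run_demo() -> Tuple[List[str], Dict[str, float]]:
    """Simulate one short adaptive session with hypothetical items and answers."""
    item_attr = {"q1": "median", "q2": "median", "q3": "variance", "q4": "variance"}
    answers = {"q1": True, "q2": True, "q3": False, "q4": True}
    mastery = {"median": 0.5, "variance": 0.5}  # uninformative starting point
    used: Set[str] = set()
    order: List[str] = []
    while (item := next_item(item_attr, mastery, used)) is not None:
        attr = item_attr[item]
        mastery[attr] = update_mastery(mastery[attr], answers[item])
        used.add(item)
        order.append(item)
    return order, mastery


if __name__ == "__main__":
    order, mastery = run_demo()
    print(order)  # ['q1', 'q3', 'q2', 'q4']
    print({a: round(p, 2) for a, p in mastery.items()})  # {'median': 0.95, 'variance': 0.36}
```

Because each answer shifts only the estimate for the concept that question measures, the result is a profile of strengths and weaknesses rather than a single total score. The walrus operator requires Python 3.8 or later.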

Through Cheng’s system, students — and their teachers — receive a “traffic light” report for each attribute directly after the test, showing green if they’ve mastered the skill, yellow for borderline, and red if they need to spend significantly more time on that material.
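A per-attribute report like this can be produced by mapping each estimated mastery probability onto three bands. The cut-offs used here (0.8 and 0.4) and the function names are hypothetical; the article does not say how Cheng's system sets its thresholds.

```python
from typing import Dict

# Hypothetical cut-offs; the actual thresholds are not stated in the article.
GREEN_AT = 0.8   # at or above this: mastered
RED_BELOW = 0.4  # below this: needs significantly more time


def traffic_light(p_mastery: float,
                  green_at: float = GREEN_AT, red_below: float = RED_BELOW) -> str:
    """Map an estimated mastery probability to a report color."""
    if not 0.0 <= p_mastery <= 1.0:
        raise ValueError("mastery probability must be between 0 and 1")
    if p_mastery >= green_at:
        return "green"   # skill mastered
    if p_mastery < red_below:
        return "red"     # needs significantly more time
    return "yellow"      # borderline


def report(profile: Dict[str, float]) -> Dict[str, str]:
    """Build a per-attribute traffic-light report, ordered by attribute name."""
    return {attr: traffic_light(p) for attr, p in sorted(profile.items())}


if __name__ == "__main__":
    print(report({"median": 0.95, "variance": 0.36, "boxplot": 0.6}))
    # {'boxplot': 'yellow', 'median': 'green', 'variance': 'red'}
```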

“I was very glad that teachers find it useful as well, especially the feature where they are able to select questions that tap into a certain attribute at a detailed level,” Cheng said. “They can see a class profile, as well as individual student profiles, which tells them if there is something they need to focus on more with the entire group.”

Surprising findings

Throughout the project, Cheng’s team has also been conducting research on three major components of student engagement — affective engagement, or how interested students are in the material; behavioral engagement, or how much time they spend studying and completing homework; and cognitive engagement, or how well students can apply the knowledge and skills they gain to real-world problems.

The results, which were somewhat surprising to Cheng, showed that cognitive engagement was the strongest predictor of differences in learning among students.

“If you’re able to have that higher-order thinking and connect the dots between things you learn in different classes and between what you learn in class and real life, that is what really matters,” she said.


In her study, behavioral engagement was, remarkably, not a significant predictor of success, although Cheng suspects this may be because AP students already show a high level of behavioral engagement. Her next project, which focuses on non-AP students, should shed more light on that finding.

Another surprising finding concerned issues of identity — whether a student identifies themselves as someone who does well at statistics and could pursue a career in the field — and how it varied between gender groups.

“It was really astonishing — the girls and boys do equally well on the assessments, get equal amounts of teacher support, and are equally engaged. But the girls are less likely to say, ‘I am going to pursue statistics as a career’ or ‘I am statistician material,’” she said. “Even among a group of students who self-selected an AP course, the difference was still there.”

Identifying interventions

Cheng with members of her lab


Cheng’s team is also focused on community outreach and has hosted training and workshops for more than 80 teachers so far. In their final year, they plan to scale up the adaptive testing program to make it available to the community at large.

The testing system may be a particularly important tool for under-resourced schools in lower socioeconomic areas, where Cheng said students are less likely to take the AP final exam.

The project has laid the groundwork for future research, she said, including projects that expand the testing system to other types of classes, explore issues of identity and gender, and identify interventions teachers can use to improve engagement.

“It is very exciting from a researcher’s perspective to understand what’s going on in terms of engagement,” she said. “This has identified where we should target interventions to promote learning. It could be introducing more real-life problems into classes or including more hands-on projects — that’s something we want to explore further. But this was a very important first step.”
